LayerMerge is a novel method for making convolutional neural networks (CNNs) more efficient with minimal loss in performance. Traditional methods for reducing network depth usually follow one of two approaches:

1. Pruning Convolution Layers: Aggressively removes parameters, risking loss of important information.

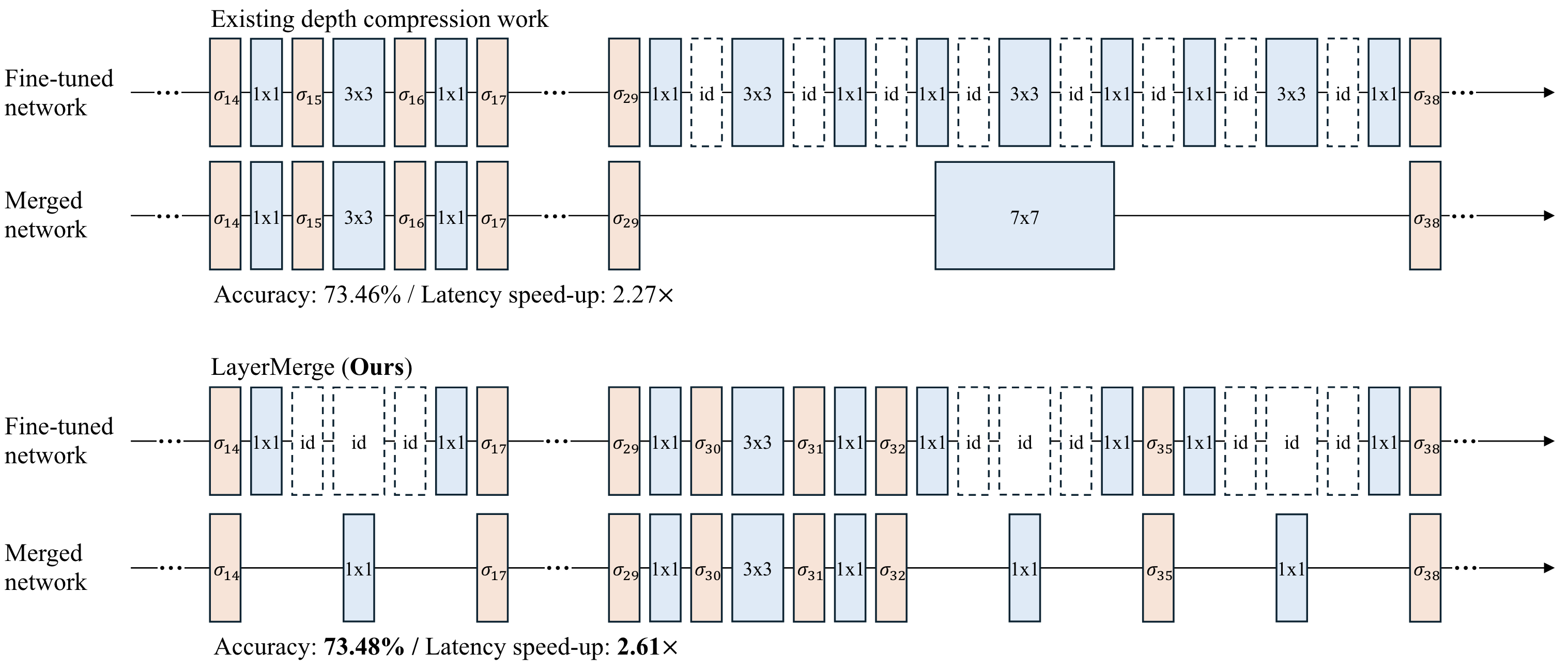

2. Pruning Activation Layers and Merging Layers: Eliminates redundant activation layers and merges the resulting consecutive convolution layers, which can increase kernel sizes and negate the speed gains.

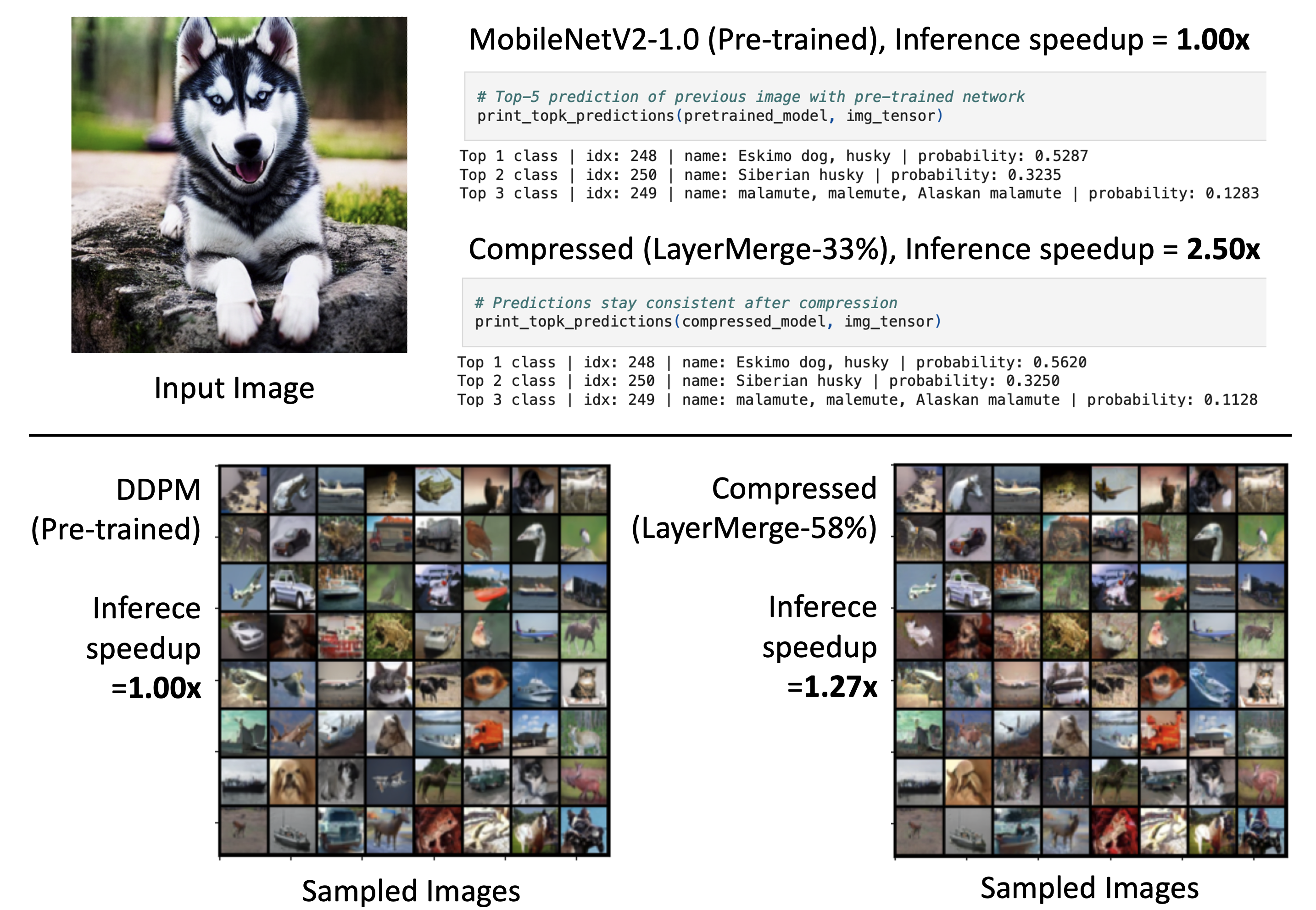
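
The kernel growth in the second approach can be seen in a minimal 1D sketch. It assumes single-channel, stride-1 convolutions with no activation in between, and uses NumPy's `np.convolve`; the helper name `merge_kernels` is made up for illustration and is not part of LayerMerge.

```python
import numpy as np

def merge_kernels(k1, k2):
    """Return one kernel equivalent to applying k1 and then k2
    (stride 1, single channel, no activation in between)."""
    return np.convolve(k1, k2, mode="full")

rng = np.random.default_rng(0)
x = rng.standard_normal(32)   # toy input signal
k1 = rng.standard_normal(3)   # first convolution, kernel size 3
k2 = rng.standard_normal(3)   # second convolution, kernel size 3

# Apply the two convolutions one after the other.
two_step = np.convolve(np.convolve(x, k1, mode="valid"), k2, mode="valid")

# Apply the single merged convolution instead.
merged = merge_kernels(k1, k2)
one_step = np.convolve(x, merged, mode="valid")

assert np.allclose(two_step, one_step)
print(len(k1), len(k2), len(merged))  # 3 3 5
```

Two size-3 kernels merge into one size-5 kernel (in general, sizes `a` and `b` give `a + b - 1`), so each merge removes a layer but makes the remaining one more expensive.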

LayerMerge addresses these issues by jointly pruning convolution layers and activation functions. It selects which layers to remove so that inference speeds up while the loss in performance stays as small as possible.

Since the number of candidate selections grows exponentially with the number of layers, we formulate a novel surrogate optimization problem and solve it efficiently via dynamic programming.

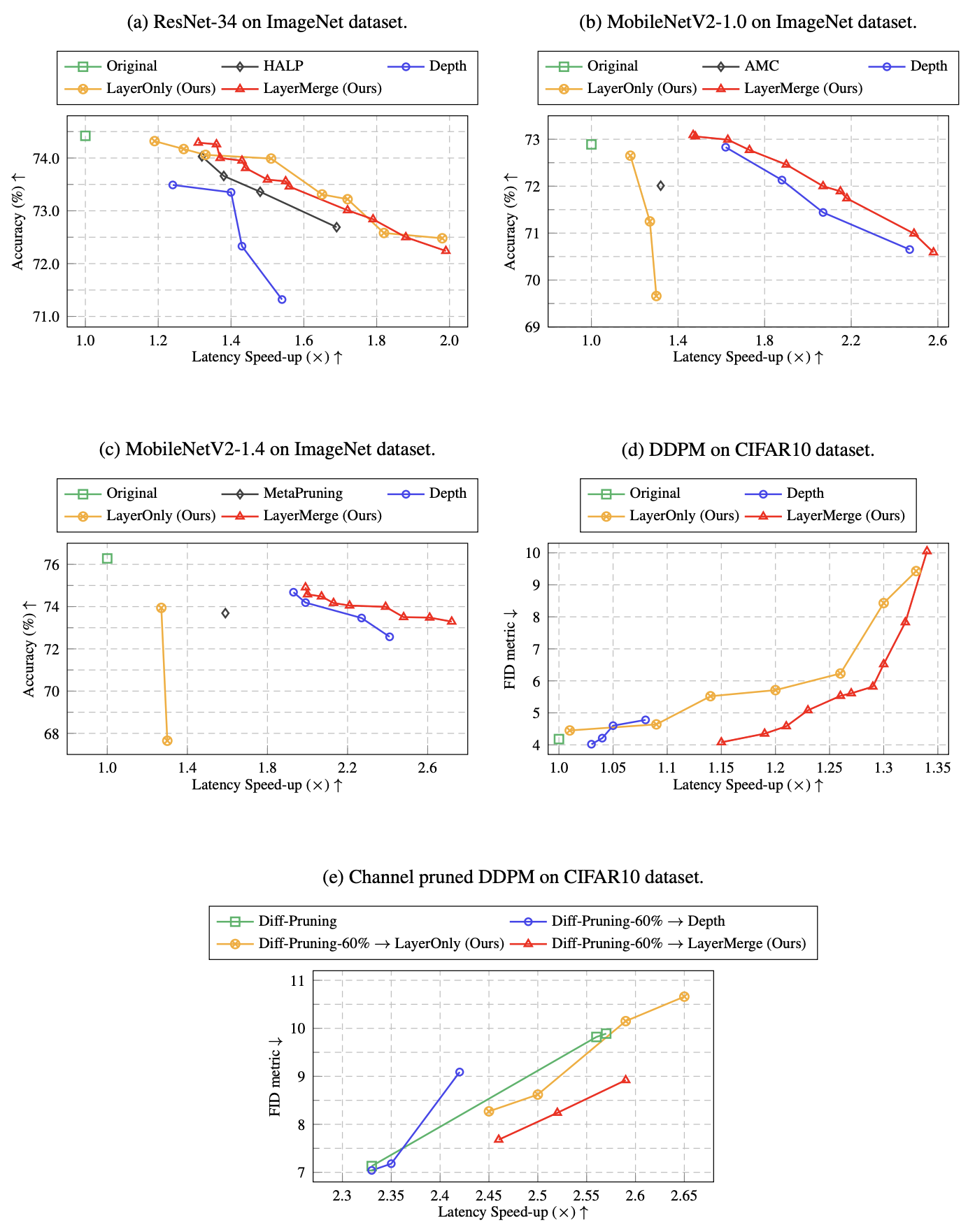
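
To give a feel for how dynamic programming avoids enumerating every selection, here is a simplified sketch of a budget-constrained dynamic program. It is not the surrogate problem from the paper: it only chooses where to keep layer boundaries, does not model pruning individual convolution layers, and assumes integer (discretized) latencies. The function `select_segments` and the `latency` and `importance` tables are hypothetical names and toy values invented for this example.

```python
import math

def select_segments(latency, importance, budget):
    """Pick boundaries 0 = b0 < b1 < ... < bm = n that maximize total
    importance while total integer latency stays <= budget.

    latency[i][j] / importance[i][j]: hypothetical cost and score of
    replacing layers i+1..j by one merged layer (only i < j is used).
    Returns (best_score, boundaries), or (None, []) if nothing fits.
    """
    n = len(latency) - 1
    neg = -math.inf
    # best[j][t]: best score for the first j layers with latency at most t
    best = [[neg] * (budget + 1) for _ in range(n + 1)]
    prev = [[None] * (budget + 1) for _ in range(n + 1)]
    best[0] = [0.0] * (budget + 1)
    for j in range(1, n + 1):
        for t in range(budget + 1):
            for i in range(j):
                cost = latency[i][j]
                if cost > t or best[i][t - cost] == neg:
                    continue
                score = best[i][t - cost] + importance[i][j]
                if score > best[j][t]:
                    best[j][t] = score
                    prev[j][t] = i
    if best[n][budget] == neg:
        return None, []
    # Walk the stored choices backwards to recover the boundaries.
    boundaries, j, t = [n], n, budget
    while j > 0:
        i = prev[j][t]
        t -= latency[i][j]
        boundaries.append(i)
        j = i
    return best[n][budget], boundaries[::-1]

# Toy 3-layer network: merging more layers costs less than running them
# separately, but the merged layer is slower than a single original layer.
latency = [[0, 2, 3, 5], [0, 0, 2, 3], [0, 0, 0, 2], [0, 0, 0, 0]]
importance = [[0, 1.0, 1.5, 1.6], [0, 0, 1.0, 1.2], [0, 0, 0, 1.0], [0, 0, 0, 0]]

score, plan = select_segments(latency, importance, budget=5)
print(score, plan)  # 2.5 [0, 2, 3]
```

With a budget of 5, the sketch merges the first two layers and keeps the third. The table has `(n + 1) * (budget + 1)` entries and each is filled by scanning at most `n` predecessors, so the cost is polynomial rather than exponential in the number of layers.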

Our results show that LayerMerge outperforms existing depth reduction methods on tasks including image classification and generation.