Hello! I am a second-year PhD student in the Machine Learning Lab of the Computer Science department at Seoul National University, advised by Hyun Oh Song. My research interests lie in building efficient machine learning systems by solving tractable discrete and continuous optimization problems. I received my Bachelor's degree in Statistics from Seoul National University in 2023.


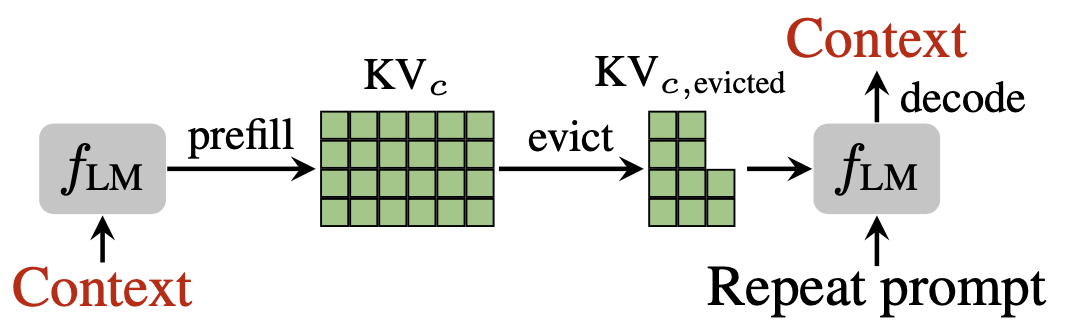

Jang-Hyun Kim, Jinuk Kim, Sangwoo Kwon, Jae W. Lee, Sangdoo Yun, Hyun Oh Song. arXiv, 2025. Paper | Code | Project page | Bibtex

We propose KVzip, a query-agnostic KV cache eviction method that enables effective reuse of compressed KV caches across diverse queries. Evaluations demonstrate that KVzip reduces KV cache size by 3-4x with negligible performance loss.


Jinuk Kim, Marwa El Halabi, Wonpyo Park, Clemens JS Schaefer, Deokjae Lee, Yeonhong Park, Jae W. Lee, Hyun Oh Song. ICML, 2025. Paper | Code | Project page | Bibtex

We propose GuidedQuant, a post-training quantization approach that integrates gradient information from the end loss into the layer-wise quantization objective. We also introduce LNQ, a non-uniform scalar quantization algorithm that is guaranteed to monotonically decrease the quantization objective value.


Jinuk Kim, Marwa El Halabi, Mingi Ji, Hyun Oh Song. ICML, 2024. Paper | Code | Project page | Poster | Bibtex

We propose LayerMerge, a depth compression method that selects which activation layers and convolution layers to remove in order to achieve a desired inference speed-up while minimizing performance loss.


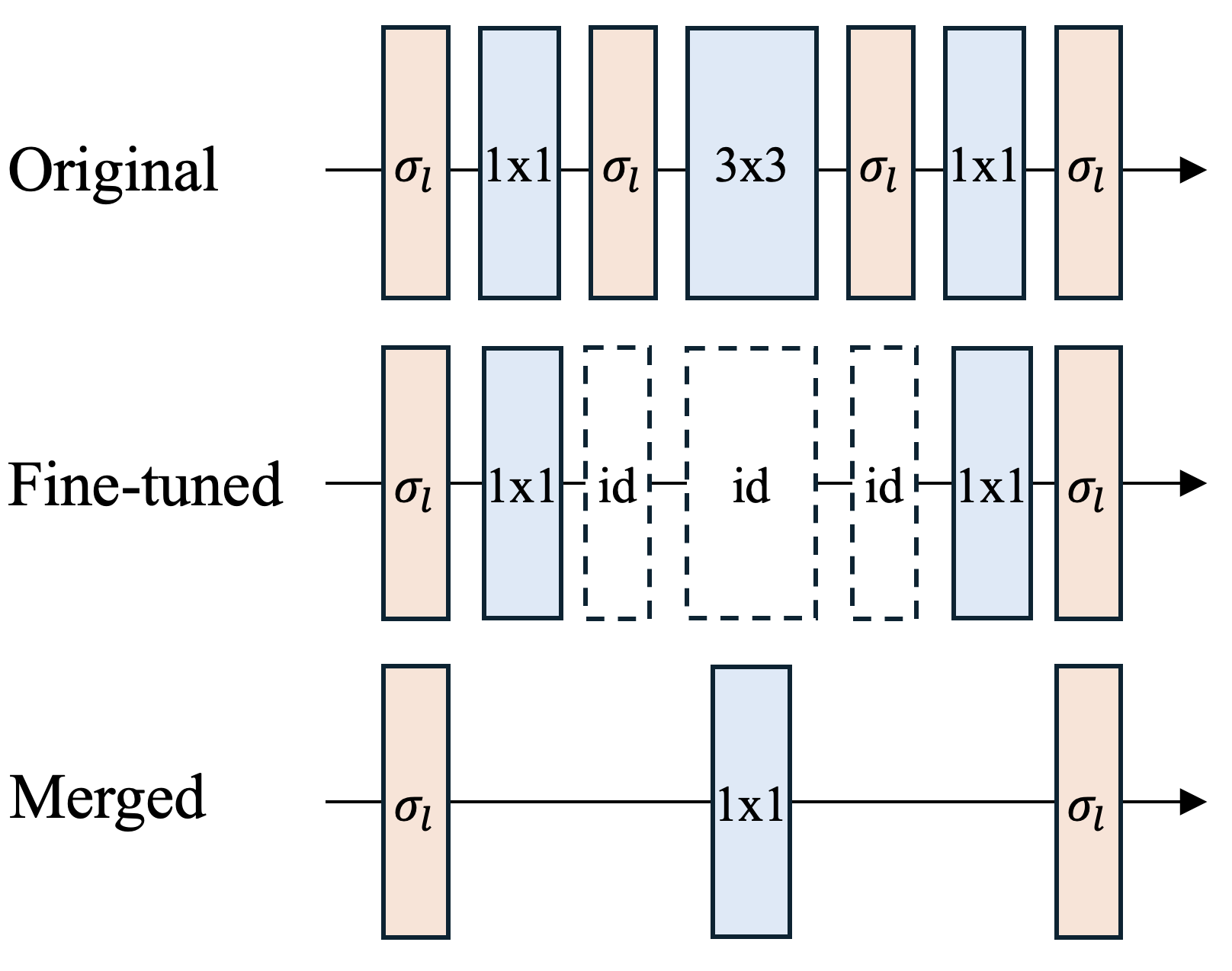

Jinuk Kim*, Yeonwoo Jeong*, Deokjae Lee, Hyun Oh Song. ICML, 2023. Paper | Code | Blog | Bibtex

We formulate a subset selection problem that replaces inefficient activation layers with identity functions and optimally merges the resulting consecutive convolution operations into shallower equivalent convolutions, reducing inference latency.


Jang-Hyun Kim, Jinuk Kim, Seong Joon Oh, Sangdoo Yun, Hwanjun Song, Joonhyun Jeong, Jung-Woo Ha, Hyun Oh Song. ICML, 2022. Paper | Code | Bibtex

We propose a dataset condensation framework that generates multiple synthetic data samples under a limited storage budget through an efficient parameterization that exploits data regularity, and we develop an effective optimization technique for it.


Code | An Android/iOS service that collects notices from the websites of SNU departments and aggregates them (Android / Aug 2021 / 100+ MAU / 80+ WAU / 1000+ downloads).


Last updated: June 22, 2025. Template based on Jon Barron's website.